
Saturday, May 24, 2008 8:37 PM

Alek Icev, Ads Quality Test Engineering Manager

I’d like to take a moment to introduce you to the team that tests the ads ranking algorithms. We like to think that we had a hand in the web’s shift to a “content meritocracy”. As you know, Google search results are not biased by human editors, and we don’t allow anyone to buy a spot at the top of the results list. This idea builds trust with users and lets the community decide what’s important.

Recently, we started applying the same concept to online advertising. We asked ourselves how to bring the same level of “content meritocracy” to online advertising, where everybody pays to have ads displayed on Google and on our partner sites. In other words, we needed to change a system that was predominantly driven by human influence into one that builds its merit on feedback from the community. The idea was to penalize the ranking of paid ads in several circumstances: when few users click on a particular ad, when an ad’s landing page is not relevant, or when users don’t like an ad’s content. We want to give our users the most relevant ads possible for every click. To make this vision a reality, we are building one of the largest online, real-time machine learning labs in the world. We learn from everything: clicks, queries, ads, landing pages, conversions… hundreds of signals.
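To make the idea of merit-based ranking concrete, here is a minimal sketch in which an ad's position depends on predicted user response and landing-page relevance, not just on how much the advertiser pays. The scoring formula (bid × predicted click-through rate × landing-page score) and every name below (`Ad`, `ad_score`, `rank_ads`, `landing_page_score`) are hypothetical illustrations; this post does not describe how Google actually combines its signals.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    ad_id: str
    bid: float                 # advertiser's maximum cost-per-click bid
    predicted_ctr: float       # hypothetical model estimate of click probability (0..1)
    landing_page_score: float  # hypothetical landing-page relevance score (0..1)


def ad_score(ad: Ad) -> float:
    """Hypothetical merit-weighted score: a high bid alone cannot buy the top slot."""
    return ad.bid * ad.predicted_ctr * ad.landing_page_score


def rank_ads(ads: list[Ad]) -> list[Ad]:
    # Highest score first: an expensive ad that few users click, or one with an
    # irrelevant landing page, can lose to a cheaper but more useful ad.
    return sorted(ads, key=ad_score, reverse=True)


ads = [
    Ad("expensive_irrelevant", bid=5.00, predicted_ctr=0.01, landing_page_score=0.5),
    Ad("cheap_relevant", bid=1.00, predicted_ctr=0.08, landing_page_score=0.9),
]
print([ad.ad_id for ad in rank_ads(ads)])  # ['cheap_relevant', 'expensive_irrelevant']
```

Here the $5.00 bid scores 0.025 while the $1.00 bid scores 0.072, so community feedback (as captured by the predicted click rate and relevance) outweighs payment.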

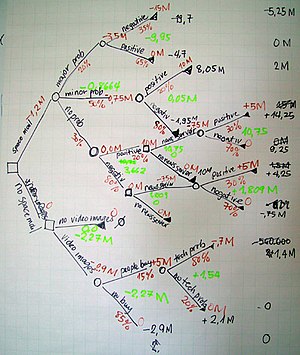

The Google Ad Prediction System brought new challenges to Test Engineering. We needed to build the abstraction layers and metrics systems that let us tell whether the system is organically getting better or regressing. Put another way, we started off without anything like the decision tree or perceptron that a bank or credit card company embeds in its risk analysis, the neural net behind speech recognition, or the latest tweaks to the expectation-maximization algorithms used to predict protein transcription in cells. The amount and variety of data that the Google Ad Prediction models learn from is immense, yet each prediction must be made in milliseconds. The computing resources, ads databases, and infrastructure needed to serve predictions for every ad shown today are beyond imagination. Our challenge is to train and test learning models that span clusters of servers and databases, to simulate ads traffic, and to have everything compiled and running from the latest code changes submitted to the huge source depot. The icing on the cake is running all of that on a 24/7 schedule.
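One common way to decide whether a click-prediction model is "getting better or regressing" is to score a baseline and a candidate model on the same held-out traffic with a metric such as log loss. The sketch below assumes that approach; the metric choice, the `tolerance` threshold, and the function names (`log_loss`, `is_regression`) are illustrative assumptions, not a description of Google's actual metrics systems.

```python
import math


def log_loss(labels, probs, eps=1e-15):
    """Mean binary cross-entropy of predicted click probabilities (lower is better)."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


def is_regression(labels, baseline_probs, candidate_probs, tolerance=0.001):
    """Hypothetical gate: flag the candidate if it is worse than the baseline by more than tolerance."""
    return log_loss(labels, candidate_probs) > log_loss(labels, baseline_probs) + tolerance


# Observed clicks (1) and non-clicks (0) on a small held-out sample.
labels = [1, 0, 0, 1, 0]
baseline = [0.7, 0.2, 0.1, 0.6, 0.3]   # predictions from the current model
candidate = [0.5, 0.5, 0.5, 0.5, 0.5]  # an uninformative candidate model
print(is_regression(labels, baseline, candidate))  # True
```

Running such a check on every code change, rather than once before launch, is the kind of continuous, automated testing the team describes; a real system would use far more traffic and several complementary metrics.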

On top of all the technical challenges, we are also challenging the industry definition of “testing”: we believe in automated tests that are incorporated upstream into the development process and run on a continuous basis. The Ads Quality Test Engineering Group at Google works on bleeding-edge testing infrastructure to test, simulate, and train the Google Ad Prediction systems in real time.